No

enum Position {

TOP = 'TOP',

BOTTOM = 'BOTTOM',

}This might sound evident, but it needs to be here because there can’t be another first step to optimization: if you’re trying to optimize, you should first look into avoiding work. This includes concepts like memoization, laziness and incremental computation. This would be applied differently depending on the context. In React, for example, that would mean applying memo(), useMemo() and other applicable primitives.

我经常感觉 javascript 代码的运行速度比实际要慢得多,这仅仅是因为它没有得到适当的优化。以下是我发现有用的常见优化技术的摘要。请注意,性能的权衡通常是可读性,因此何时考虑性能与可读性的问题是留给读者的问题。我还要指出,谈论优化必然需要谈论基准测试。如果函数仅代表实际总体运行时间的一小部分,那么对函数进行几个小时的微优化以使其运行速度提高 100 倍是没有意义的。如果要进行优化,第一步也是最重要的一步是基准测试。我将在后面的要点中介绍该主题。另请注意,微基准通常存在缺陷,其中可能包括此处介绍的那些。我已尽力避免这些陷阱,但不要在没有基准测试的情况下盲目应用此处提出的任何要点。

I have included runnable examples for all cases where it’s possible. They show by default the results I got on my machine (brave 122 on archlinux) but you can run them yourself. As much as I hate to say it, Firefox has fallen a bit behind in the optimization game, and represents a very small fraction of the traffic for now, so I don’t recommend using the results you’d get on Firefox as useful indicators.

我已经为所有可能的情况提供了可运行的示例。它们默认显示我在我的机器上得到的结果(archlinux 上的 brave 122),但你可以自己运行它们。尽管我不想这么说,但 Firefox 在优化游戏中已经落后了一点,并且目前仅占流量的一小部分,因此我不建议使用在 Firefox 上获得的结果作为有用的指标。

This might sound evident, but it needs to be here because there can’t be another first step to optimization: if you’re trying to optimize, you should first look into avoiding work. This includes concepts like memoization, laziness and incremental computation. This would be applied differently depending on the context. In React, for example, that would mean applying memo(), useMemo() and other applicable primitives.

这听起来可能很明显,但它需要在这里,因为不可能有另一个优化的第一步:如果你想优化,你应该首先考虑避免工作。这包括记忆、惰性和增量计算等概念。根据上下文,这将有不同的应用。例如,在 React 中,这意味着应用memo() 、 useMemo()和其他适用的原语。

Javascript makes it easy to hide the real cost of string comparisons. If you need to compare strings in C, you’d use the strcmp(a, b) function. Javascript uses === instead, so you don’t see the strcmp. But it’s there, and a string comparison will usually (but not always) require comparing each of the characters in the string with the ones in the other string; string comparison is O(n). One common JavaScript pattern to avoid is strings-as-enums. But with the advent of TypeScript this should be easily avoidable, as enums are integers by default.

JavaScript 可以轻松隐藏字符串比较的实际成本。如果需要比较 C 中的字符串,可以使用strcmp(a, b)函数。 Javascript 使用===代替,因此您看不到strcmp 。但它就在那里,字符串比较通常(但并非总是)需要将字符串中的每个字符与另一个字符串中的字符进行比较;字符串比较的时间复杂度为O(n) 。一种需要避免的常见 JavaScript 模式是字符串作为枚举。但随着 TypeScript 的出现,这种情况应该很容易避免,因为枚举默认是整数。

No

enum Position {

TOP = 'TOP',

BOTTOM = 'BOTTOM',

}Yeppers

enum Position {

TOP, // = 0

BOTTOM, // = 1

}Here is a comparison of the costs:

以下是成本比较:

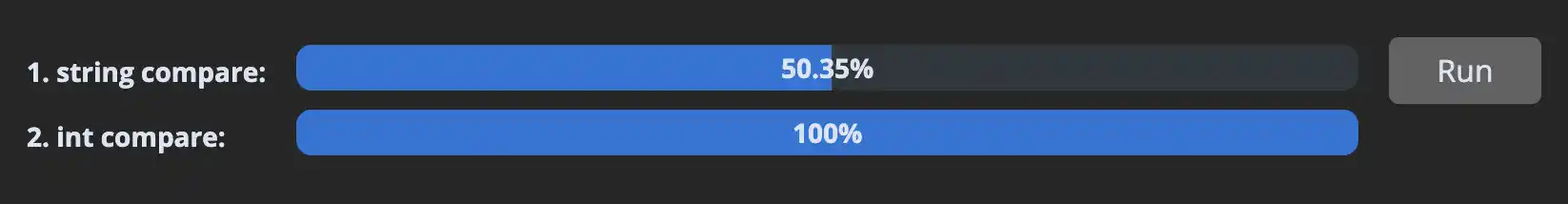

1. string compare

const Position = {

TOP: 'TOP',

BOTTOM: 'BOTTOM',

}

let _ = 0

for (let i = 0; i < 1000000; i++) {

let current = i % 2 === 0 ?

Position.TOP : Position.BOTTOM

if (current === Position.TOP)

_ += 1

}2. int compare

const Position = {

TOP: 0,

BOTTOM: 1,

}

let _ = 0

for (let i = 0; i < 1000000; i++) {

let current = i % 2 === 0 ?

Position.TOP : Position.BOTTOM

if (current === Position.TOP)

_ += 1

}

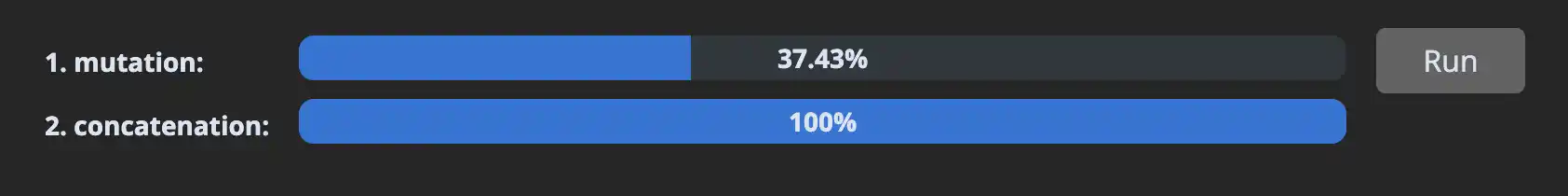

Percentage results represent the number of operations completed within 1s, divided by the number of operations of the highest scoring case. Higher is better.

百分比结果表示 1 秒内完成的操作数除以最高得分案例的操作数。越高越好。

As you can see, the difference can be significant. The difference isn’t necessarily due to the strcmp cost as engines can sometimes use a string pool and compare by reference, but it’s also due to the fact that integers are usually passed by value in JS engines, whereas strings are always passed as pointers, and memory accesses are expensive (see section 5). In string-heavy code, this can have a huge impact.

正如您所看到的,差异可能很大。差异不一定是由于strcmp成本造成的,因为引擎有时可以使用字符串池并通过引用进行比较,但这也是由于 JS 引擎中整数通常按值传递,而字符串总是作为指针传递,并且内存访问的成本很高(参见第 5 节)。在字符串较多的代码中,这可能会产生巨大的影响。

For a real-world example, I was able to make this JSON5 javascript parser run 2x faster*just by replacing string constants with numbers.

举一个现实世界的例子,我只需用数字替换字符串常量,就可以使这个 JSON5 javascript 解析器的运行速度提高 2 倍*。

*Unfortunately it wasn’t merged, but that’s how open-source is.

*不幸的是它没有被合并,但这就是开源的方式。

Javascript engines try to optimize code by assuming that objects have a specific shape, and that functions will receive objects of the same shape. This allows them to store the keys of the shape once for all objects of that shape, and the values in a separate flat array. To represent it in javascript:

JavaScript 引擎尝试通过假设对象具有特定形状并且函数将接收相同形状的对象来优化代码。这允许他们为该形状的所有对象存储一次形状的键,并将值存储在单独的平面数组中。用 javascript 表示它:

const objects = [

{

name: 'Anthony',

age: 36,

},

{

name: 'Eckhart',

age: 42

}

]const shape = [

{ name: 'name', type: 'string' },

{ name: 'age', type: 'integer' },

]

const objects = [

['Anthony', 36],

['Eckhart', 42],

]on terminology 关于术语的注释

I have used the word “shape” for this concept, but be aware that you may also find “hidden class” or “map” used to describe it, depending on the engine.

我使用了“形状”这个词来表达这个概念,但请注意,您可能还会发现*“隐藏类”或“映射”*用于描述它,具体取决于引擎。

For example, at runtime if the following function receives two objects with the shape { x: number, y: number }, the engine is going to speculate that future objects will have the same shape, and generate machine code optimized for that shape.

例如,在运行时,如果以下函数接收形状为{ x: number, y: number }两个对象,则引擎将推测未来的对象将具有相同的形状,并生成针对该形状优化的机器代码。

function add(a, b) {

return {

x: a.x + b.x,

y: a.y + b.y,

}

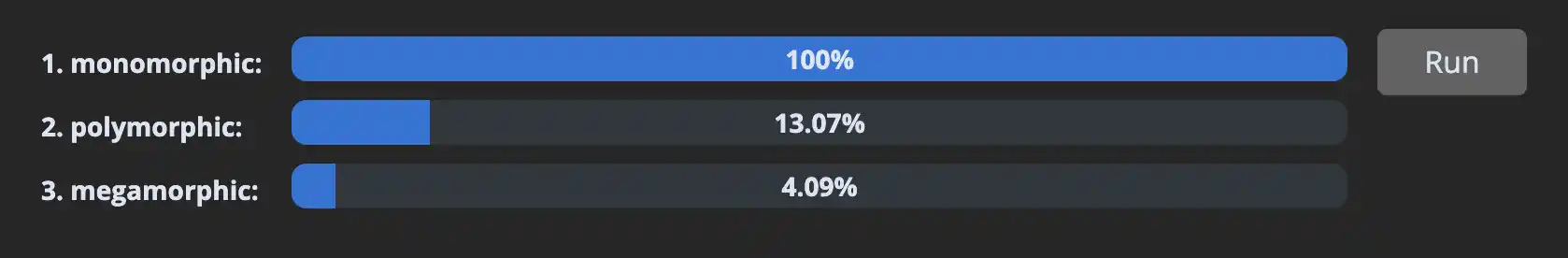

}If one would instead pass an object not with the shape { x, y } but with the shape { y, x }, the engine would need to undo its speculation and the function would suddenly become considerably slower. I’m going to limit my explanation here because you should read the excellent post from mraleph if you want more details, but I’m going to highlight that V8 in particular has 3 modes, for accesses that are: monomorphic (1 shape), polymorphic (2-4 shapes), and megamorphic (5+ shapes). Let’s say you really want to stay monomorphic, because the slowdown is drastic:

如果传递的对象不是形状为{ x, y }而是形状为{ y, x }的对象,则引擎将需要撤消其推测,并且该函数会突然变得相当慢。我将在这里限制我的解释,因为如果您想了解更多详细信息,您应该阅读mraleph 的精彩文章,但我要强调的是,V8 特别具有 3 种模式,用于访问:单态(1 种形状)、多态(2-4 个形状)和超态(5 个以上形状)。假设你真的想保持单态,因为速度减慢得很厉害:

// setup

let _ = 0

// 1. monomorphic

const o1 = { a: 1, b: _, c: _, d: _, e: _ }

const o2 = { a: 1, b: _, c: _, d: _, e: _ }

const o3 = { a: 1, b: _, c: _, d: _, e: _ }

const o4 = { a: 1, b: _, c: _, d: _, e: _ }

const o5 = { a: 1, b: _, c: _, d: _, e: _ } // all shapes are equal

// 2. polymorphic

const o1 = { a: 1, b: _, c: _, d: _, e: _ }

const o2 = { a: 1, b: _, c: _, d: _, e: _ }

const o3 = { a: 1, b: _, c: _, d: _, e: _ }

const o4 = { a: 1, b: _, c: _, d: _, e: _ }

const o5 = { b: _, a: 1, c: _, d: _, e: _ } // this shape is different

// 3. megamorphic

const o1 = { a: 1, b: _, c: _, d: _, e: _ }

const o2 = { b: _, a: 1, c: _, d: _, e: _ }

const o3 = { b: _, c: _, a: 1, d: _, e: _ }

const o4 = { b: _, c: _, d: _, a: 1, e: _ }

const o5 = { b: _, c: _, d: _, e: _, a: 1 } // all shapes are different

// test case

function add(a1, b1) {

return a1.a + a1.b + a1.c + a1.d + a1.e + b1.a + b1.b + b1.c + b1.d + b1.e

}

let result = 0

for (let i = 0; i < 1000000; i++) {

result += add(o1, o2)

result += add(o3, o4)

result += add(o4, o5)

}

Easier said than done but: create all your objects with the exact same shape. Even something as trivial as writing your React component props in a different order can trigger this.

说起来容易做起来难,但是:用完全相同的形状创建所有对象。即使是像以不同顺序编写 React 组件 props 这样微不足道的事情也可能会触发此问题。

For example, here are simple cases I found in React’s codebase, but they already had a much higher impact case of the same problem a few years ago because they were initializing an object with an integer, then later storing a float. Yes, changing the type also changes the shape. Yes, there are integer and float types hidden behind number. Deal with it.

例如,以下是我在 React 代码库中发现的简单案例,但几年前它们已经对同一问题产生了更高影响的案例,因为它们使用整数初始化对象,然后存储浮点数。是的,改变类型也会改变形状。是的, number后面隐藏着整数和浮点类型。处理它。

Number representation 数字表示

Engines can usually encode integers as values. For example, V8 represents values in 32 bits, with integers as compact Smi (SMall Integer) values, but floats and large integers are passed as pointers just like strings and objects. JSC uses a 64 bit encoding, double tagging, to pass all numbers by value, as SpiderMonkey does, and the rest is passed as pointers.

引擎通常可以将整数编码为值。例如,V8 表示 32 位的值,整数作为紧凑的Smi (SMall Integer) 值,但浮点数和大整数作为指针传递,就像字符串和对象一样。 JSC 使用 64 位编码、双重标记来按值传递所有数字,就像 SpiderMonkey 所做的那样,其余的则作为指针传递。

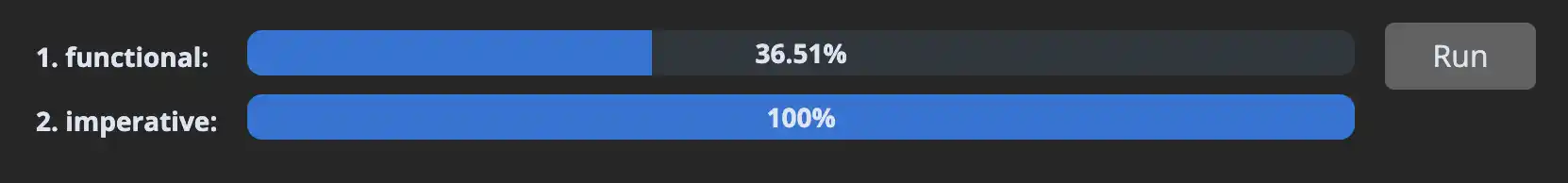

I love functional programming as much as anyone else, but unless you’re working in Haskell/OCaml/Rust where functional code gets compiled to efficient machine code, functional will always be slower than imperative.

我和其他人一样喜欢函数式编程,但除非您在 Haskell/OCaml/Rust 中工作,其中函数式代码被编译为高效的机器代码,否则函数式编程总是比命令式慢。

const result =

[1.5, 3.5, 5.0]

.map(n => Math.round(n))

.filter(n => n % 2 === 0)

.reduce((a, n) => a + n, 0)The problem with those methods is that:

这些方法的问题在于:

for loop would allow looping once. for循环允许循环一次。// setup:

const numbers = Array.from({ length: 10_000 }).map(() => Math.random())

// 1. functional

const result =

numbers

.map(n => Math.round(n * 10))

.filter(n => n % 2 === 0)

.reduce((a, n) => a + n, 0)

// 2. imperative

let result = 0

for (let i = 0; i < numbers.length; i++) {

let n = Math.round(numbers[i] * 10)

if (n % 2 !== 0) continue

result = result + n

}

Object methods such as Object.values(), Object.keys() and Object.entries() suffer from similar problems, as they also allocate more data, and memory accesses are the root of all performance issues. No really I swear, I’ll show you in section 5.

Object.values() 、 Object.keys() 和 Object.entries() 等对象方法也遇到类似的问题,因为它们也分配更多的数据,而内存访问是所有性能问题的根源。不,我真的发誓,我会在第 5 节中向您展示。

Another place to look for optimization gains is any source of indirection, of which I can see 3 main sources: 另一个寻找优化收益的地方是任何间接来源,我可以看到其中 3 个主要来源:

const point = { x: 10, y: 20 }

// 1.

// Proxy objects are harder to optimize because their get/set function might

// be running custom logic, so engines can't make their usual assumptions.

const proxy = new Proxy(point, { get: (t, k) => { return t[k] } })

// Some engines can make proxy costs disappear, but those optimizations are

// expensive to make and can break easily.

const x = proxy.x

// 2.

// Usually ignored, but accessing an object via `.` or `[]` is also an

// indirection. In easy cases, the engine may very well be able to optimize the

// cost away:

const x = point.x

// But each additional access multiplies the cost, and makes it harder for the

// engine to make assumptions about the state of `point`:

const x = this.state.circle.center.point.x

// 3.

// And finally, function calls can also have a cost. Engine are generally good

// at inlining these:

function getX(p) { return p.x }

const x = getX(p)

// But it's not guaranteed that they can. In particular if the function call

// isn't from a static function but comes from e.g. an argument:

function Component({ point, getX }) {

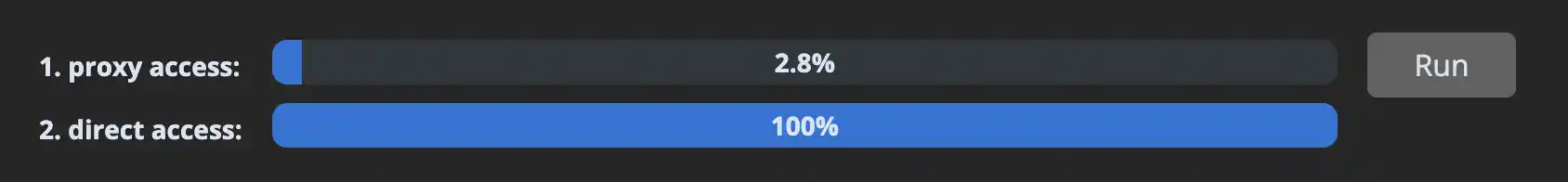

return getX(point)

}The proxy benchmark is particularly brutal on V8 at the moment. Last time I checked, proxy objects were always falling back from the JIT to the interpreter, seeing from those results it might still be the case.

目前 V8 上的代理基准测试尤其残酷。上次我检查时,代理对象总是从 JIT 回退到解释器,从这些结果来看,情况可能仍然如此。

// 1. proxy access

const point = new Proxy({ x: 10, y: 20 }, { get: (t, k) => t[k] })

for (let _ = 0, i = 0; i < 100_000; i++) { _ += point.x }

// 2. direct access

const point = { x: 10, y: 20 }

const x = point.x

for (let _ = 0, i = 0; i < 100_000; i++) { _ += x }

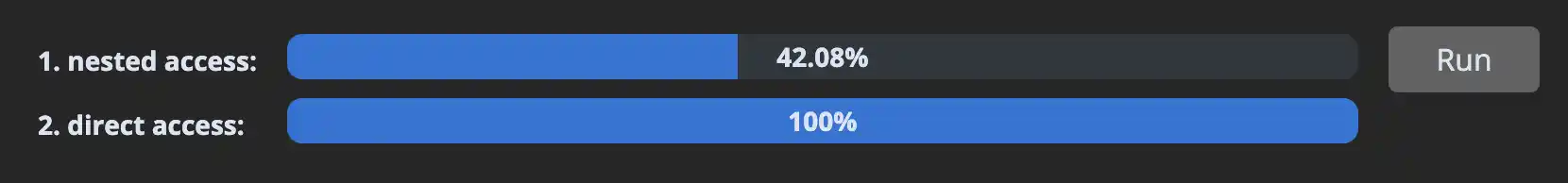

I also wanted to showcase accessing a deeply nested object vs direct access, but engines are very good at optimizing away object accesses via escape analysis when there is a hot loop and a constant object. I inserted a bit of indirection to prevent it.

我还想展示访问深度嵌套对象与直接访问,但是当存在热循环和常量对象时,引擎非常擅长通过逃逸分析来优化对象访问。我插入了一些间接的方法来防止这种情况发生。

// 1. nested access

const a = { state: { center: { point: { x: 10, y: 20 } } } }

const b = { state: { center: { point: { x: 10, y: 20 } } } }

const get = (i) => i % 2 ? a : b

let result = 0

for (let i = 0; i < 100_000; i++) {

result = result + get(i).state.center.point.x }

// 2. direct access

const a = { x: 10, y: 20 }.x

const b = { x: 10, y: 20 }.x

const get = (i) => i % 2 ? a : b

let result = 0

for (let i = 0; i < 100_000; i++) {

result = result + get(i)

}

This point requires a bit of low-level knowledge, but has implications even in javascript, so I’ll explain. From the CPU point of view, retrieving memory from RAM is slow. To speed things up, it uses mainly two optimizations.

这一点需要一些低级知识,但即使在 javascript 中也有影响,所以我会解释一下。从 CPU 的角度来看,从 RAM 中检索内存的速度很慢。为了加快速度,它主要使用两种优化。

The first one is prefetching: it fetches more memory ahead of time, in the hope that it’s the memory you’ll be interested in. It always guesses that if you request one memory address, you’ll be interested in the memory region that comes right after that. So accessing data sequentially is the key. In the following example, we can observe the impact of accessing memory in random order.

第一个是预取:它提前获取更多内存,希望这是您感兴趣的内存。它总是猜测,如果您请求一个内存地址,您会对随后出现的内存区域感兴趣。就在那之后。所以顺序访问数据是关键。在下面的示例中,我们可以观察以随机顺序访问内存的影响。

// setup:

const K = 1024

const length = 1 * K * K

// Theses points are created one after the other, so they are allocated

// sequentially in memory.

const points = new Array(length)

for (let i = 0; i < points.length; i++) {

points[i] = { x: 42, y: 0 }

}

// This array contains the *same data* as above, but shuffled randomly.

const shuffledPoints = shuffle(points.slice())

// 1. sequential

let _ = 0

for (let i = 0; i < points.length; i++) { _ += points[i].x }

// 2. random

let _ = 0

for (let i = 0; i < shuffledPoints.length; i++) { _ += shuffledPoints[i].x }

What the eff should I do about this? 我该怎么办呢?

This aspect is probably the hardest to put in practice, because javascript doesn’t have a way of placing objects in memory, but you can use that knowledge to your advantage as in the example above, for example to operate on data before re-ordering or sorting it. You cannot assume that objects created sequentially will stay at the same location after some time because the garbage collector might move them around. There is one exception to that, and it’s arrays of numbers, preferably TypedArray instances:

这方面可能是最难付诸实践的,因为 javascript 没有办法将对象放入内存中,但是您可以利用这些知识来发挥自己的优势,如上面的示例所示,例如在重新排序之前对数据进行操作或对其进行排序。您不能假设顺序创建的对象在一段时间后将保留在同一位置,因为垃圾收集器可能会移动它们。有一个例外,它是数字数组,最好是TypedArray实例:

// from this

const points = [{ x: 0, y: 5 }, { x: 0, y: 10 }]

// to this

const points = new Int64Array([0, 5, 0, 10])For a more detailed example, see this link*.

有关更详细的示例,请参阅此链接* 。

*Note that it contains some optimizations that are now outdated, but it’s still accurate overall.

*请注意,它包含一些现已过时的优化,但总体而言仍然准确。

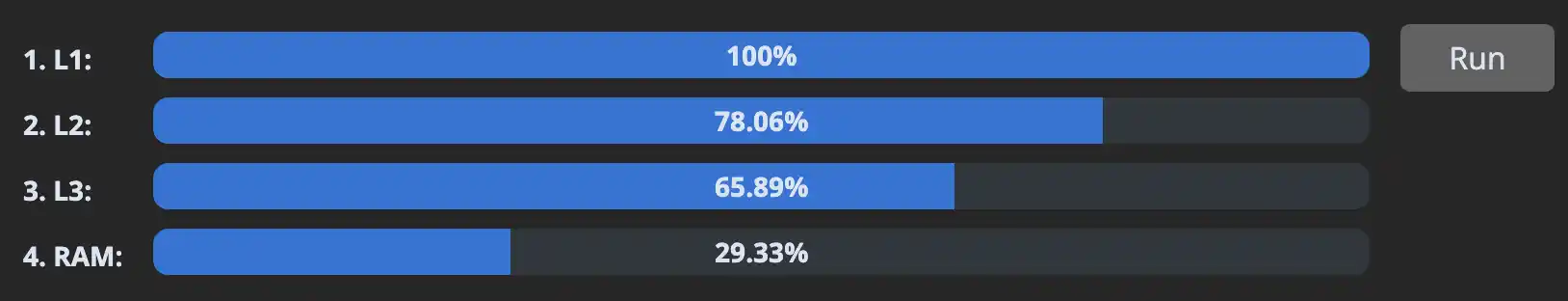

The second optimization CPUs use is the L1/L2/L3 caches: those are like faster RAMs, but they are also more expensive, so they are much smaller. They contain RAM data, but act as an LRU cache. Data comes in while it’s “hot” (being worked on), and is written back to the main RAM when new working data needs the space. So the key here is use as little data as possible to keep your working dataset in the fast caches. In the following example, we can observe what are the effects of busting each of the successive caches.

CPU 使用的第二个优化是 L1/L2/L3 缓存:它们就像更快的 RAM,但它们也更昂贵,因此它们要小得多。它们包含 RAM 数据,但充当 LRU 缓存。数据在“热”(正在处理)时进入,并在新的工作数据需要空间时写回主 RAM。因此,这里的关键是使用尽可能少的数据来将工作数据集保留在快速缓存中。在下面的示例中,我们可以观察破坏每个连续缓存的影响。

// setup:

const KB = 1024

const MB = 1024 * KB

// These are approximate sizes to fit in those caches. If you don't get the

// same results on your machine, it might be because your sizes differ.

const L1 = 256 * KB

const L2 = 5 * MB

const L3 = 18 * MB

const RAM = 32 * MB

// We'll be accessing the same buffer for all test cases, but we'll

// only be accessing the first 0 to `L1` entries in the first case,

// 0 to `L2` in the second, etc.

const buffer = new Int8Array(RAM)

buffer.fill(42)

const random = (max) => Math.floor(Math.random() * max)

// 1. L1

let r = 0; for (let i = 0; i < 100000; i++) { r += buffer[random(L1)] }

// 2. L2

let r = 0; for (let i = 0; i < 100000; i++) { r += buffer[random(L2)] }

// 3. L3

let r = 0; for (let i = 0; i < 100000; i++) { r += buffer[random(L3)] }

// 4. RAM

let r = 0; for (let i = 0; i < 100000; i++) { r += buffer[random(RAM)] }

What the eff should I do about this? 我该怎么办呢?

Ruthlessly eliminate every single data or memory allocations that can be eliminated. The smaller your dataset is, the faster your program will run. Memory I/O is the bottleneck for 95% of programs. Another good strategy can be to split your work into chunks, and ensure you work on a small dataset at a time.

无情地消除每一个可以消除的数据或内存分配。数据集越小,程序运行得越快。内存 I/O 是 95% 的程序的瓶颈。另一个好的策略是将您的工作分成多个块,并确保您一次处理一个小数据集。

For more details on CPU and memory, see this link. 有关 CPU 和内存的更多详细信息,请参阅此链接。

About immutable data structures 关于不可变数据结构

Immutability is great for clarity and correctness, but in the context of performance, updating an immutable data structure means making a copy of the container, and that’s more memory I/O that will flush your caches. You should avoid immutable data structures when possible.

不可变性对于清晰度和正确性来说非常有用,但在性能方面,更新不可变数据结构意味着创建容器的副本,而更多的内存 I/O 会刷新缓存。您应该尽可能避免不可变的数据结构。

About the { ...spread } operator 关于 { ...spread } 运算符

It’s very convenient, but every time you use it you create a new object in memory. More memory I/O, slower caches!

它非常方便,但每次使用它时都会在内存中创建一个新对象。更多内存 I/O,更慢的缓存!

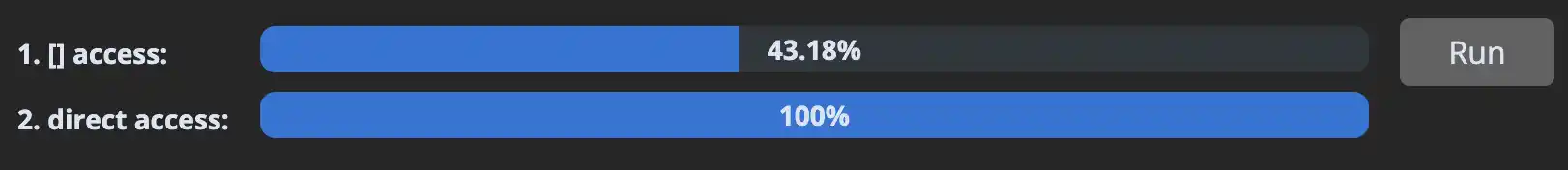

As explained in section 2, engines use shapes to optimize objects. However, when the shape grows too large, the engine has no choice but to use a regular hashmap (like a Map object). And as we saw in section 5, cache misses decrease performance significantly. Hashmaps are prone to this because their data is usually randomly & evenly distributed over the memory region they occupy. Let’s see how it behaves with this map of some users indexed by their ID.

正如第 2 节中所解释的,引擎使用形状来优化对象。然而,当形状变得太大时,引擎别无选择,只能使用常规的哈希图(如Map对象)。正如我们在第 5 节中看到的,缓存未命中会显着降低性能。哈希图很容易出现这种情况,因为它们的数据通常是随机且均匀分布在它们占用的内存区域上的。让我们看看它在按 ID 索引的一些用户的地图上的表现如何。

// setup:

const USERS_LENGTH = 1_000

// setup:

const byId = {}

Array.from({ length: USERS_LENGTH }).forEach((_, id) => {

byId[id] = { id, name: 'John'}

})

let _ = 0

// 1. [] access

Object.keys(byId).forEach(id => { _ += byId[id].id })

// 2. direct access

Object.values(byId).forEach(user => { _ += user.id })

And we can also observe how the performance keeps degrading as the object size grows:

我们还可以观察随着对象大小的增长,性能如何不断下降:

// setup:

const USERS_LENGTH = 100_000

What the eff should I do about this? 我该怎么办呢?

As demonstrated above, avoid having to frequently index into large objects. Prefer turning the object into an array beforehand. Organizing your data to have the ID on the model can help, as you can use Object.values() and not have to refer to the key map to get the ID.

如上所述,避免频繁索引大型对象。最好事先将对象转换为数组。组织数据以在模型上包含 ID 会有所帮助,因为您可以使用Object.values()而不必引用键映射来获取 ID。

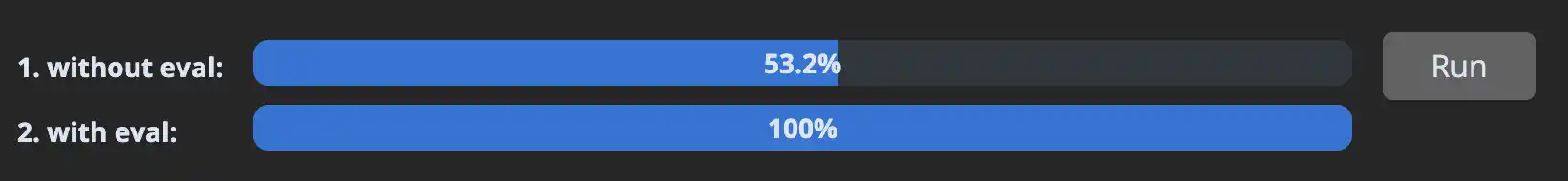

Some javascript patterns are hard to optimize for engines, and by using eval() or its derivatives you can make those patterns disappear. In this example, we can observe how using eval() avoids the cost of creating an object with a dynamic object key:

某些 javascript 模式很难针对引擎进行优化,通过使用eval()或其派生物,您可以使这些模式消失。在此示例中,我们可以观察使用eval()如何避免使用动态对象键创建对象的成本:

// setup:

const key = 'requestId'

const values = Array.from({ length: 100_000 }).fill(42)

// 1. without eval

function createMessages(key, values) {

const messages = []

for (let i = 0; i < values.length; i++) {

messages.push({ [key]: values[i] })

}

return messages

}

createMessages(key, values)

// 2. with eval

function createMessages(key, values) {

const messages = []

const createMessage = new Function('value',

`return { ${JSON.stringify(key)}: value }`

)

for (let i = 0; i < values.length; i++) {

messages.push(createMessage(values[i]))

}

return messages

}

createMessages(key, values)

Another good use-case for eval could be to compile a filter predicate function where you discard the branches that you know will never be taken. In general, any function that is going to be run in a very hot loop is a good candidate for this kind of optimization.

eval的另一个很好的用例可能是编译一个过滤谓词函数,在其中丢弃您知道永远不会被采用的分支。一般来说,任何将在非常热的循环中运行的函数都是这种优化的良好候选者。

Obviously the usual warnings about eval() apply: don’t trust user input, sanitize anything that gets passed into the eval()‘d code, and don’t create any XSS possibility. Also note that some environments don’t allow access to eval(), such as browser pages with a CSP.

显然,有关eval()的常见警告适用:不要信任用户输入,清理传递到eval()代码中的任何内容,并且不要创建任何 XSS 可能性。另请注意,某些环境不允许访问eval() ,例如具有 CSP 的浏览器页面。

We’ve already seen above how strings are more expensive than they appear. Well I have kind of a good news/bad news situation here, which I’ll announce in the only logical order (bad first, good second): strings are more complex than they appear, but they can also be quite efficient used well.

我们已经在上面看到了琴弦的价格比它们看上去的要贵。好吧,我在这里有一个好消息/坏消息的情况,我将按照唯一的逻辑顺序宣布(坏第一,好第二):字符串比它们看起来更复杂,但它们也可以非常有效地使用。

String operations are a core part of JavaScript due to its context. To optimize string-heavy code, engines had to be creative. And by that I mean, they had to represent the String object with multiple string representation in C++, depending on the use case. There are two general cases you should worry about, because they hold true for V8 (the most common engine by far), and generally also in other engines.

由于其上下文,字符串操作是 JavaScript 的核心部分。为了优化大量字符串的代码,引擎必须具有创造性。我的意思是,他们必须根据用例,用 C++ 中的多个字符串表示形式来表示String对象。您应该担心两种常见情况,因为它们适用于 V8(迄今为止最常见的发动机),并且通常也适用于其他发动机。

First, strings concatenated with + don’t create a copy of the two input strings. The operation creates a pointer to each substring. If it was in typescript, it would be something like this:

首先,用+连接的字符串不会创建两个输入字符串的副本。该操作创建一个指向每个子字符串的指针。如果用打字稿写的话,会是这样的:

class String {

abstract value(): char[] {}

}

class BytesString {

constructor(bytes: char[]) {

this.bytes = bytes

}

value() {

return this.bytes

}

}

class ConcatenatedString {

constructor(left: String, right: String) {

this.left = left

this.right = right

}

value() {

return [...this.left.value(), ...this.right.value()]

}

}

function concat(left, right) {

return new ConcatenatedString(left, right)

}

const first = new BytesString(['H', 'e', 'l', 'l', 'o', ' '])

const second = new BytesString(['w', 'o', 'r', 'l', 'd'])

// See ma, no array copies!

const message = concat(first, second)Second, string slices also don’t need to create copies: they can simply point to a range in another string. To continue with the example above:

其次,字符串切片也不需要创建副本:它们可以简单地指向另一个字符串中的范围。继续上面的例子:

class SlicedString {

constructor(source: String, start: number, end: number) {

this.source = source

this.start = start

this.end = end

}

value() {

return this.source.value().slice(this.start, this.end)

}

}

function substring(source, start, end) {

return new SlicedString(source, start, end)

}

// This represents "He", but it still contains no array copies.

// It's a SlicedString to a ConcatenatedString to two BytesString

const firstTwoLetters = substring(message, 0, 2)But here’s the issue: once you need to start mutating those bytes, that’s the moment you start paying copy costs. Let’s say we go back to our String class and try to add a .trimEnd method:

但问题是:一旦您需要开始改变这些字节,那就是您开始支付复制成本的时刻。假设我们回到String类并尝试添加.trimEnd方法:

class Stclass String {

abstract value(): char[] {}

trimEnd() {

// `.value()` here might be calling

// our Sliced->Concatenated->2*Bytes string!

const bytes = this.value()

const result = bytes.slice()

while (result[result.length - 1] === ' ')

result.pop()

return new BytesString(result)

}

}So let’s jump to an example where we compare using operations that use mutation versus only using concatenation:

因此,让我们跳到一个示例,我们比较使用使用变异的操作与仅使用连接的操作:

// setup:

const classNames = ['primary', 'selected', 'active', 'medium']

// 1. mutation

const result =

classNames

.map(c => `button--${c}`)

.join(' ')

// 2. concatenation

const result =

classNames

.map(c => 'button--' + c)

.reduce((acc, c) => acc + ' ' + c, '')

What the eff should I do about this? 我该怎么办呢?

In general, try to avoid mutation for as long as possible. This includes methods such as .trim(), .replace(), etc. Consider how you can avoid those methods. In some engines, string templates can also be slower than +. In V8 at the moment it’s the case, but might not be in the future so as always, benchmark.

一般来说,尽量避免突变。这包括.trim() 、 .replace()等方法。考虑如何避免这些方法。在某些引擎中,字符串模板也可能比+慢。目前在 V8 中就是这种情况,但将来可能不会,所以一如既往地进行基准测试。

A note on SlicedString above, you should note that if a small substring to a very large string is alive in memory, it might prevent the garbage collector from collecting the large string! If you’re processing large texts and extracting small strings from it, you might be leaking large amounts of memory.

上面关于SlicedString的注释,您应该注意,如果一个非常大的字符串的一个小子字符串在内存中存在,它可能防止垃圾收集器收集大字符串!如果您正在处理大文本并从中提取小字符串,则可能会泄漏大量内存。

const large = Array.from({ length: 10_000 }).map(() => 'string').join('')

const small = large.slice(0, 50)

// ^ will keep `large` aliveThe solution here is to use mutation methods to our advantage. If we use one of them on small, it will force a copy, and the old pointer to large will be lost:

这里的解决方案是使用对我们有利的突变方法。如果我们在small上使用其中一个,它将强制进行复制,并且指向large旧指针将丢失:

// replace a token that doesn't exist

const small = small.replace('#'.repeat(small.length + 1), '')For more details, see string.h on V8 or JSString.h on JavaScriptCore.

有关更多详细信息,请参阅V8 上的 string.h或JavaScriptCore 上的 JSString.h 。

On strings complexity 关于字符串的复杂性

I have skimmed very quickly over things, but there are a lot of implementation details that add complexity to strings. There are often minimum lengths for each of those string representations. For example a concatenated string might not be used for very small strings. Or sometimes there are limits, for example avoiding pointing to a substring of a substring. Reading the C++ files linked above gives a good overview of the implementation details, even if just reading the comments.

我很快浏览了一遍,但是有很多实现细节增加了字符串的复杂性。每个字符串表示形式通常都有最小长度。例如,连接字符串可能不适用于非常小的字符串。或者有时存在限制,例如避免指向子字符串的子字符串。阅读上面链接的 C++ 文件可以很好地概述实现细节,即使只是阅读注释也是如此。

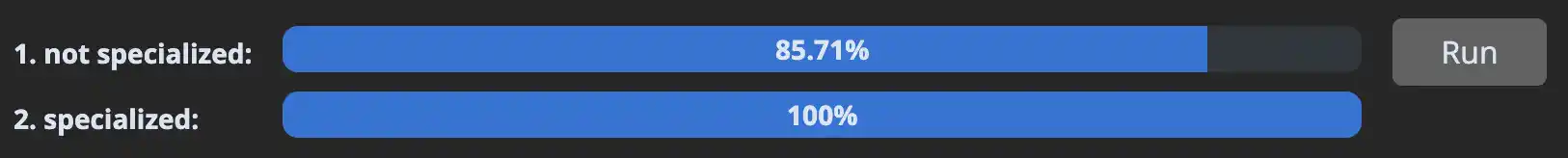

One important concept in performance optimization is specialization: adapting your logic to fit in the constraints of your particular use-case. This usually means figuring out what conditions are likely to be true for your case, and coding for those conditions.

性能优化中的一个重要概念是专业化:调整您的逻辑以适应特定用例的约束。这通常意味着弄清楚哪些条件可能适合您的情况,并针对这些条件进行编码。

Let’s say we are a merchant that sometimes needs to add tags to their product list. We know from experience that our tags are usually empty. Knowing that information, we can specialize our function for that case:

假设我们是一个商家,有时需要将标签添加到他们的产品列表中。根据经验我们知道我们的标签通常是空的。知道这些信息后,我们可以针对这种情况专门化我们的函数:

// setup:

const descriptions = ['apples', 'oranges', 'bananas', 'seven']

const someTags = {

apples: '::promotion::',

}

const noTags = {}

// Turn the products into a string, with their tags if applicable

function productsToString(description, tags) {

let result = ''

description.forEach(product => {

result += product

if (tags[product]) result += tags[product]

result += ', '

})

return result

}

// Specialize it now

function productsToStringSpecialized(description, tags) {

// We know that `tags` is likely to be empty, so we check

// once ahead of time, and then we can remove the `if` check

// from the inner loop

if (isEmpty(tags)) {

let result = ''

description.forEach(product => {

result += product + ', '

})

return result

} else {

let result = ''

description.forEach(product => {

result += product

if (tags[product]) result += tags[product]

result += ', '

})

return result

}

}

function isEmpty(o) { for (let _ in o) { return false } return true }

// 1. not specialized

for (let i = 0; i < 100; i++) {

productsToString(descriptions, someTags)

productsToString(descriptions, noTags)

productsToString(descriptions, noTags)

productsToString(descriptions, noTags)

productsToString(descriptions, noTags)

}

// 2. specialized

for (let i = 0; i < 100; i++) {

productsToStringSpecialized(descriptions, someTags)

productsToStringSpecialized(descriptions, noTags)

productsToStringSpecialized(descriptions, noTags)

productsToStringSpecialized(descriptions, noTags)

productsToStringSpecialized(descriptions, noTags)

}

This sort of optimization can give you moderate improvements, but those will add up. They are a nice addition to more crucial optimizations, like shapes and memory I/O. But note that specialization can turn against you if your conditions change, so be careful when applying this one.

这种优化可以给你带来适度的改进,但这些改进会累积起来。它们是对更重要的优化(例如形状和内存 I/O)的一个很好的补充。但请注意,如果您的条件发生变化,专业化可能会对您不利,因此在应用这一专业化时要小心。

Branch prediction and branchless code 分支预测和无分支代码

Removing branches from your code can be incredibly efficient for performance. For more details on what a branch predictor is, read the classic Stack Overflow answer Why is processing a sorted array faster.

从代码中删除分支对于性能来说非常高效。有关分支预测器的更多详细信息,请阅读经典的 Stack Overflow 答案Why isprocessing a Sorted array Faster 。

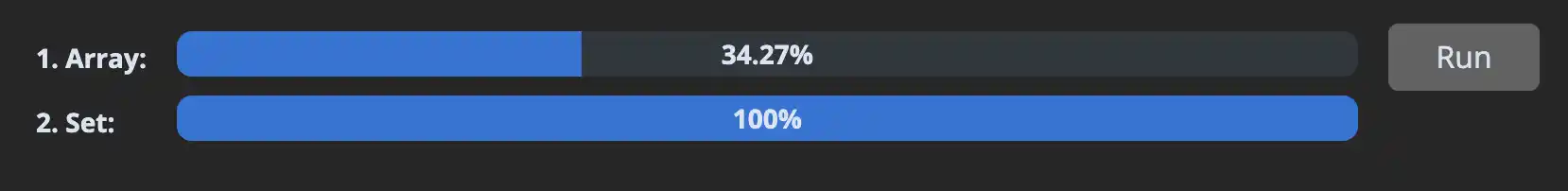

I won’t go in details about data structures as they would require their own post. But be aware that using the incorrect data structures for your use-case can have a bigger impact than any of the optimizations above. I would suggest you to be familiar with the native ones like Map and Set, and to learn about linked lists, priority queues, trees (RB and B+) and tries.

我不会详细介绍数据结构,因为它们需要自己的帖子。但请注意,为您的用例使用不正确的数据结构可能比上述任何优化产生更大的影响。我建议您熟悉原生的Map和Set等,并了解链表、优先级队列、树(RB 和 B+)和尝试。

But for a quick example, let’s compare how Array.includes does against Set.has for a small list:

但作为一个简单的例子,让我们比较一下Array.includes与Set.has对于一个小列表的表现:

// setup:

const userIds = Array.from({ length: 1_000 }).map((_, i) => i)

const adminIdsArray = userIds.slice(0, 10)

const adminIdsSet = new Set(adminIdsArray)

// 1. Array

let _ = 0

for (let i = 0; i < userIds.length; i++) {

if (adminIdsArray.includes(userIds[i])) { _ += 1 }

}

// 2. Set

let _ = 0

for (let i = 0; i < userIds.length; i++) {

if (adminIdsSet.has(userIds[i])) { _ += 1 }

}

As you can see, the data structure choice makes a very impactful difference.

正如您所看到的,数据结构的选择会产生非常大的影响。

As a real-world example, I had a case where we were able to reduce the runtime of a function from 5 seconds to 22 milliseconds by switching out an array with a linked list.

作为一个现实世界的例子,我有一个例子,通过用链表切换数组,我们能够将函数的运行时间从 5 秒减少到 22 毫秒。

I’ve left this section for the end for one reason: I needed to establish credibility with the fun sections above. Now that I (hopefully) have it, let me tell you that benchmarking is the most important part of optimization. Not only is it the most important, but it’s also hard. Even after 20 years of experience, I still sometimes create benchmarks that are flawed, or use the profiling tools incorrectly. So whatever you do, please put the most effort into benchmarking correctly.

我把这一部分留到最后有一个原因:我需要通过上面有趣的部分建立可信度。现在我(希望)已经掌握了它,让我告诉您基准测试是优化中最重要的部分。不仅是最重要的,而且也是最难的。即使拥有 20 年的经验,我有时仍然会创建有缺陷的基准测试,或者错误地使用分析工具。因此,无论你做什么,请尽最大努力进行正确的基准测试。

Your priority should always be to optimize the function/section of code that makes up the biggest part of your runtime. If you spend time optimizing anything else than the top, you are wasting time.

您的首要任务应该始终是优化构成运行时最大部分的函数/代码部分。如果你花时间优化顶部以外的任何东西,那么你就是在浪费时间。

Run your code in production mode and base your optimizations on those observations. JS engines are very complex, and will often behave differently in micro-benchmarks than in real-world scenarios. For example, take this micro-benchmark:

在生产模式下运行代码并根据这些观察结果进行优化。 JS 引擎非常复杂,并且在微基准测试中的表现通常与在现实场景中不同。例如,采用这个微基准:

const a = { type: 'div', count: 5, }

const b = { type: 'span', count: 10 }

function typeEquals(a, b) {

return a.type === b.type

}

for (let i = 0; i < 100_000; i++) {

typeEquals(a, b)

}If you’ve payed attention sooner, you will realize that the engine will specialize the function for the shape { type: string, count: number }. But does that hold true in your real-world use-case? Are a and b always of that shape, or will you receive any kind of shape? If you receive many shapes in production, this function will behave differently then.

如果你早点注意的话,你会发现引擎会专门针对形状的功能 { type: string, count: number } 。但这在您的实际用例中是否成立? a和b总是这种形状吗?还是你会得到任何形状?如果您在生产中收到许多形状,则此函数的行为将会有所不同。

If you’ve just optimized a function and it now runs 100x faster, doubt it. Try to disprove your results, try it in production mode, throw stuff at it. Similarly, doubt also your tools. The mere fact of observing a benchmark with devtools can modify its behavior.

如果您刚刚优化了某个函数,现在它的运行速度提高了 100 倍,请对此表示怀疑。尝试反驳你的结果,在生产模式下尝试,向它扔东西。同样,也要怀疑你的工具。仅使用开发工具观察基准测试就可以改变其行为。

Different engines will optimize certain patterns better or worse than others. You should benchmark for the engine(s) that are relevant to you, and prioritize which one is more important. Here’s a real-world example in Babel where improving V8 means decreasing JSC’s performance.

不同的引擎会比其他引擎更好或更差地优化某些模式。您应该对与您相关的引擎进行基准测试,并优先考虑哪一个更重要。这是 Babel 中的一个真实示例,其中改进 V8 意味着降低 JSC 的性能。

Various remarks about profiling and devtools.

关于分析和开发工具的各种评论。

If you’re profiling in the browser, make sure you use a clean and empty browser profile. I even use a separate browser for this. If you’re profiling and you have browser extensions enabled, they can mess up the measurements. React devtools in particular will substantially affect results, rendering code may appear slower than it appears in the mirror to your users.

如果您在浏览器中进行分析,请确保使用干净且空的浏览器配置文件。我什至为此使用单独的浏览器。如果您在进行分析时启用了浏览器扩展,它们可能会扰乱测量结果。 React 开发工具尤其会严重影响结果,渲染代码可能会比看起来慢在镜子里给您的用户。

Browser profiling tools are sample-based profilers, which take a sample of your stack at regular intervals. This had a big disadvantage: very small but very frequent functions might be called between those samples, and might be very much underreported in the stack charts you’ll get. Use Firefox devtools with a custom sample interval or Chrome devtools with CPU throttling to mitigate this issue.

浏览器分析工具是基于样本的分析器,它定期对堆栈进行采样。这有一个很大的缺点:在这些样本之间可能会调用非常小但非常频繁的函数,并且在您将得到的堆栈图表中可能会被严重低估。使用具有自定义采样间隔的 Firefox 开发工具或具有 CPU 限制的 Chrome 开发工具来缓解此问题。

Beyond the regular browser devtools, it may help to be aware of these options:

除了常规的浏览器开发工具之外,了解以下选项可能会有所帮助:

Chrome devtools have quite a few experimental flags that can help you figure out why things are slow. The style invalidation tracker is invaluable when you need to debug style/layout recalculations in the browser. https://github.com/iamakulov/devtools-perf-features

Chrome 开发工具有很多实验性标志,可以帮助您找出速度缓慢的原因。当您需要在浏览器中调试样式/布局重新计算时,样式失效跟踪器非常有用。

The deoptexplorer-vscode extension allows you to load V8/chromium log files to understand when your code is triggering deoptimizations, such as when you pass different shapes to a function. You don’t need the extension to read log files, but it makes the experience much more pleasant.https://github.com/microsoft/deoptexplorer-vscode

deoptexplorer-vscode 扩展允许您加载 V8/chromium 日志文件,以了解代码何时触发去优化,例如何时将不同的形状传递给函数。您不需要扩展来读取日志文件,但它使体验更加愉快。

You can always compile the debug shell for each JS engine to understand more in details how it works. This allows you to run perf and other low-level tools, and also to inspect the bytecode and machine code generated by each engine.

您始终可以为每个 JS 引擎编译调试 shell,以更详细地了解其工作原理。这允许您运行perf和其他低级工具,还可以检查每个引擎生成的字节码和机器代码。

Example for V8 | Example for JSC | Example for SpiderMonkey (missing)

V8 示例| JSC 示例| SpiderMonkey 的示例(缺失)

Hope you learned some useful tricks. If you have any comments, corrections or questions, email in the footer. I’m always happy to receive feedback or questions from readers.

希望您学到一些有用的技巧。如果您有任何意见、更正或疑问,请在页脚中发送电子邮件。我总是很高兴收到读者的反馈或问题。